Using the Azure Storage Emulator

As I'm gearing up to continue my series of posts on migrating an application to Azure, one of the things that occurred to me is that the existing implementation has unit tests. That shouldn't be a surprise, right? Somewhere along the line I'll need to work out a "whole solution" solution to unit tests; I'll also need to look at integration tests and will probably at least think about some of the cool CI/deployment things you can do with Azure. In the interim, though, it occurred to me that as I start migrating bits and bobs into Azure, running the associated unit tests will actually cost me money. It's true that having local infrastructure (build servers, test instances, all the different bits and pieces) to run unit tests on costs money, but running unit tests on my local machine doesn't incur a direct and tangible cost. Swapping this out for Azure means that any unit test I run that (a) targets a component that's been moved out to Azure, and (b) isn't an idealised "perfect world" unit test that mocks everything bar the code under test, will have a direct and tangible cost.

Putting unit tests to one side, each time I click certain buttons in the UI to see if they work, there will be a direct and tangible cost.

In mitigation of everything I've just written, for most things this cost will be a fraction of a fraction of a penny, but it's a good excuse to explore some of the options that are out there for keeping it down.

azure.com/free, and friends

Take a trip to https://azure.com/free to see what the offer consists of in your region. In the UK it's £150 of credit to use within 30 days, then 12 months of the following for free:

- 750 VM hours on Windows
- 750 VM hours on Linux
- 5 GB Blob Storage
- 5 GB File Storage
- 250 GB SQL Storage
- 2 x 64 GB Managed Disks (SSDs for VMs)
- 5 GB Cosmos DB
- 15 GB egress bandwidth

That's actually quite a lot, and on top of it there are services that Microsoft lists as perpetually free (e.g. 1,000,000 Azure Functions executions/month, 5 GB egress bandwidth).

There are other ways to manage costs, like the Microsoft Partner Network Action Pack, which provides (again in the UK) £75/month of Azure credit for internal / dev use, amongst lots of other goodies. As it costs c. £500, that's a significant saving even if the credit is the only benefit you use. I believe some Visual Studio subscriptions also come with Azure credits, but I haven't dug into that in detail myself.

Emulators, like the Azure Storage Emulator

Another option is to use the Azure Storage Emulator. This runs on your local machine (download it from https://azure.microsoft.com/en-us/downloads/; the link is about halfway down, under "Command-line tools" -> "Azure Storage Emulator") and gives you something local to talk to that quacks like Azure storage. It's great for testing (automated or otherwise) when you want to keep control over costs. It's also great if you're sat on an airplane and want to work on your code: losing the ability to work offline is a sore point that building solutions entirely from Azure resources, rather than on Azure VMs, can bring.

As you may have guessed from the title, the Azure Storage Emulator specifically is the focus of this post, so I'll get on with talking a bit about it. I downloaded it from Microsoft (see the previous paragraph), ran the installer and got about as far as clicking "Next" on the screen you'll see below before realising I'd already got it installed.

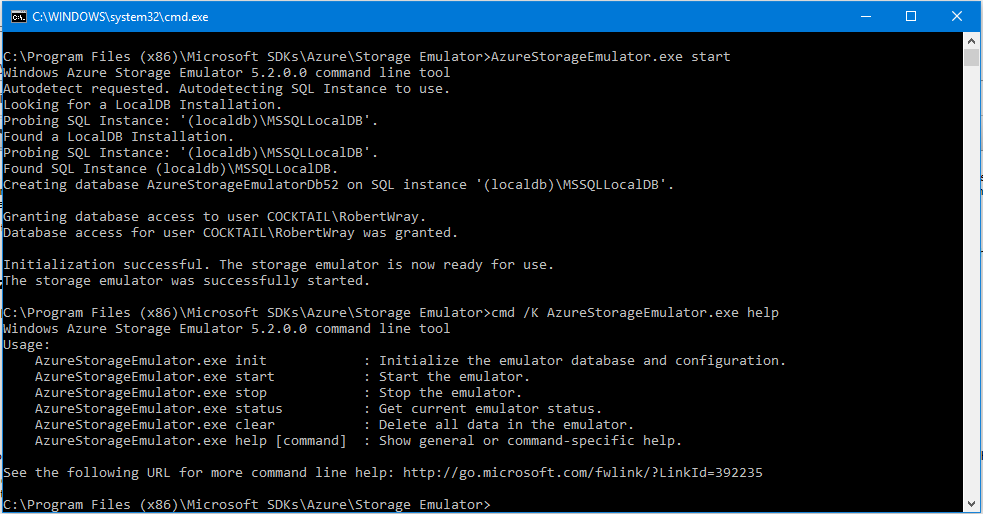

Running the Azure Storage Emulator

I'm not certain which of the things I have installed was responsible for bringing it onto my PC (my suspicion is Visual Studio 2017), but I can't face running the Visual Studio installer to work out whether one of the Workloads calls out that it's included, mainly because I'd then have to go through the "upgrade Visual Studio Installer" song and dance. Again. It probably was Visual Studio, though. Once it's installed (whether it already was or you've just installed it), it's a quick hop, skip and jump (via Cortana) to having it running.

Once you've run it up, you'll see a command window that looks very much like the one in the image right at the top of this post. Some bits will look a little different, like the name of the SQL instance being used for storage and the name of the user (you!) that's been granted access to the instance. You don't have to keep this window open, because the emulator carries on running in the background: the shortcut actually runs a .cmd file (C:\Program Files (x86)\Microsoft SDKs\Azure\Storage Emulator\StartStorageEmulator.cmd, specifically), which consists of a couple of commands:

```
AzureStorageEmulator.exe start
cmd /K AzureStorageEmulator.exe help
```

The first starts the storage emulator and the second shows some of the basic commands that the emulator supports. You can run "AzureStorageEmulator.exe stop" to stop the current instance. If you close the console and then run the .cmd file again, you'll get a message telling you that the emulator is already running.
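If you'd rather your tests made sure the emulator was running than rely on remembering to click the shortcut, the same commands can be driven from code. The following is a minimal sketch using System.Diagnostics.Process; StorageEmulatorCommands is a hypothetical helper name, and it assumes AzureStorageEmulator.exe sits alongside StartStorageEmulator.cmd in the default install folder mentioned above.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper for driving the Storage Emulator from a test fixture.
// Assumption: the executable lives in the default install folder, next to
// StartStorageEmulator.cmd; change EmulatorPath if yours is elsewhere.
public static class StorageEmulatorCommands
{
    public const string EmulatorPath =
        @"C:\Program Files (x86)\Microsoft SDKs\Azure\Storage Emulator\AzureStorageEmulator.exe";

    // Describes (but does not start) a process for a command such as
    // "start", "stop" or "status".
    public static ProcessStartInfo BuildStartInfo(string command)
    {
        if (string.IsNullOrWhiteSpace(command))
            throw new ArgumentException("A command such as \"status\" is required.", nameof(command));

        return new ProcessStartInfo
        {
            FileName = EmulatorPath,
            Arguments = command,
            UseShellExecute = false,        // required for output redirection
            RedirectStandardOutput = true,  // so Run can return what the tool prints
            CreateNoWindow = true           // no extra console window during test runs
        };
    }

    // Runs a short-lived command (e.g. "status" or "stop"), waits for it to
    // exit and returns its console output. Windows-only: Process.Start
    // throws if the executable can't be found.
    public static string Run(string command)
    {
        using (var process = Process.Start(BuildStartInfo(command)))
        {
            string output = process.StandardOutput.ReadToEnd();
            process.WaitForExit();
            return output;
        }
    }

    // Starts the emulator without redirecting output, so nothing waits on a
    // pipe that the background emulator process might keep open.
    public static void Start()
    {
        var info = BuildStartInfo("start");
        info.RedirectStandardOutput = false;
        using (var process = Process.Start(info))
        {
            process.WaitForExit();
        }
    }
}
```

A test fixture's setup could call StorageEmulatorCommands.Start() and its teardown StorageEmulatorCommands.Run("stop"). Keeping BuildStartInfo separate from the methods that actually launch anything means the part that doesn't need the emulator installed is easy to check on its own.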

Aside: Once you've got it up and running, you can use SQL Server Management Studio to have a poke around inside the SQL database that gets created to "back it", if you really want. Just make a note of the database name on the line "Creating database NNNNNNNN on SQL Instance '(localdb)\MSSQLLocalDB'", connect SQL Server Management Studio to the instance (the text inside the single quotes) using Windows Authentication, and have a look around in the named database. This isn't going to bear much, if any, relation to how Microsoft stores the data in "real" Azure (though you never know, Azure might just be SQL Server all the way down!), but if you want to have a poke around, this is how.

One command in particular that's worth running is "AzureStorageEmulator.exe status", as this gives you a list of the URLs that the emulator is listening on:

```
>AzureStorageEmulator.exe status
Windows Azure Storage Emulator 5.2.0.0 command line tool
IsRunning: True
BlobEndpoint: http://127.0.0.1:10000/
QueueEndpoint: http://127.0.0.1:10001/
TableEndpoint: http://127.0.0.1:10002/
```

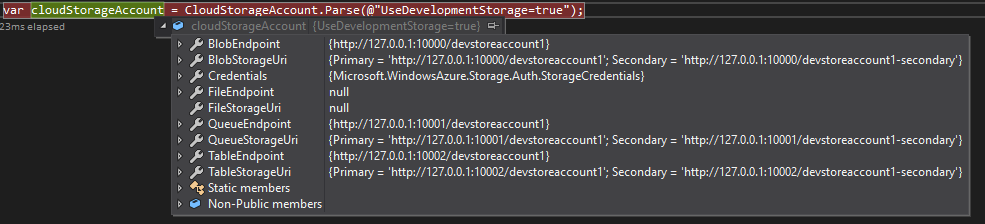
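If a test run needs to know whether the emulator is up (to skip emulator-dependent tests rather than fail them, say), the status output can be parsed. This is a minimal sketch that assumes the "Key: Value" line layout shown above for version 5.2.0.0; other versions may format it differently, and EmulatorStatus is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: turns the text printed by "AzureStorageEmulator.exe status"
// into key/value pairs, assuming one "Key: Value" pair per line.
public static class EmulatorStatus
{
    public static Dictionary<string, string> Parse(string output)
    {
        var values = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        var lines = output.Split(new[] { '\r', '\n' }, StringSplitOptions.RemoveEmptyEntries);
        foreach (var rawLine in lines)
        {
            var line = rawLine.Trim();
            // Split on the first ": " only, so the "http://" in endpoint URLs survives.
            int separator = line.IndexOf(": ", StringComparison.Ordinal);
            if (separator <= 0) continue; // e.g. the banner line with the version number
            values[line.Substring(0, separator)] = line.Substring(separator + 2).Trim();
        }
        return values;
    }

    // True only when the output contains "IsRunning: True".
    public static bool IsRunning(string output) =>
        Parse(output).TryGetValue("IsRunning", out var value) &&
        bool.TryParse(value, out var running) && running;
}
```

Feeding it the output captured from "AzureStorageEmulator.exe status" (for example via System.Diagnostics.Process with RedirectStandardOutput set) gives you IsRunning plus the three endpoint URLs.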

We don't actually need these endpoint URLs, but they do show you that the Emulator is there and listening. The reason we don't need them is that there's a special way of telling lots of things that talk to Azure that they should talk to the Emulator instead, and that's the "shortcut" connection string UseDevelopmentStorage=true. This is quickly demonstrated by creating a new Console App in Visual Studio, adding a package reference to the WindowsAzure.Storage package, and then using some of the code from my Copying/Moving a file in Azure blob storage post to end up with:

```csharp
using Microsoft.WindowsAzure.Storage;

// I even named the app, no ConsoleApp341 here!
namespace ConnectoToStorageEmulator
{
    class Program
    {
        static void Main(string[] args)
        {
            // "UseDevelopmentStorage=true" tells the client library to use the local emulator
            var cloudStorageAccount = CloudStorageAccount.Parse(@"UseDevelopmentStorage=true");
        }
    }
}
```
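UseDevelopmentStorage=true is shorthand for the emulator's well-known account, devstoreaccount1, whose name and key Microsoft publishes and which are the same on every installation. The sketch below writes that out as an explicit connection string and splits it into its parts. DevelopmentStorage, Resolve and the STORAGE_CONNECTION_STRING variable are hypothetical names, and falling back to the emulator when no real connection string is configured is a convention you'd add yourself, not something the client library does.

```csharp
using System;
using System.Collections.Generic;

public static class DevelopmentStorage
{
    // The emulator's well-known account written out in full; this should be
    // equivalent to "UseDevelopmentStorage=true" with the default ports.
    public const string ExplicitConnectionString =
        "DefaultEndpointsProtocol=http;" +
        "AccountName=devstoreaccount1;" +
        "AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;" +
        "BlobEndpoint=http://127.0.0.1:10000/devstoreaccount1;" +
        "QueueEndpoint=http://127.0.0.1:10001/devstoreaccount1;" +
        "TableEndpoint=http://127.0.0.1:10002/devstoreaccount1;";

    // Splits "key=value;key=value" into a dictionary. Only the first '=' in each
    // pair is the separator, because the base64 account key ends in "==".
    public static Dictionary<string, string> ParseConnectionString(string connectionString)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        foreach (var pair in connectionString.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries))
        {
            int equals = pair.IndexOf('=');
            if (equals <= 0) continue;
            settings[pair.Substring(0, equals).Trim()] = pair.Substring(equals + 1).Trim();
        }
        return settings;
    }

    // Hypothetical convention: use the configured value (a real account) when
    // there is one, otherwise fall back to the local emulator.
    public static string Resolve(string configuredValue) =>
        string.IsNullOrWhiteSpace(configuredValue) ? "UseDevelopmentStorage=true" : configuredValue;
}
```

For example, DevelopmentStorage.Resolve(Environment.GetEnvironmentVariable("STORAGE_CONNECTION_STRING")) could be passed straight to CloudStorageAccount.Parse, so a developer machine talks to the emulator while a build server with that variable set talks to a real account.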

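Setting a breakpoint on the closing brace of Main and running the console app, so that we can inspect the cloudStorageAccount variable, shows that the WindowsAzure.Storage package knows all about the emulator: its blob endpoint, for example, is http://127.0.0.1:10000/devstoreaccount1.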

One important thing this shows is that you should never rely on the format or structure of an Azure storage URL. The URLs for the Emulator are different, and your code will break if you rely on (for example) the first part of the path ("devstoreaccount1" here) being the name of the container. Some documentation may tell you that this is how the URL is composed, but always use one of the client libraries / wrappers / something that stops you from picking apart the URL yourself. If you don't, whatever code you write may not work against the Emulator, which will frustrate you when you want to work on it in one of those places where there's no Internet access.
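To make the pitfall concrete, here's a deliberately naive helper that assumes the first path segment is always the container. BlobUrlPitfall is a hypothetical name, and the account, container and blob names in the example URLs are made up for illustration.

```csharp
using System;

public static class BlobUrlPitfall
{
    // Deliberately naive: treats the first path segment as the container name.
    // That holds for https://<account>.blob.core.windows.net/<container>/<blob>,
    // but the emulator puts the account name first:
    // http://127.0.0.1:10000/<account>/<container>/<blob>
    public static string NaiveContainerName(Uri blobUri) =>
        blobUri.AbsolutePath.TrimStart('/').Split('/')[0];
}
```

NaiveContainerName(new Uri("http://127.0.0.1:10000/devstoreaccount1/mycontainer/myblob")) returns "devstoreaccount1" rather than "mycontainer". With WindowsAzure.Storage you can sidestep this by asking the blob reference itself, via CloudBlockBlob.Container.Name and CloudBlockBlob.Name, rather than parsing its URI.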

I could take the rest of the code from that post and validate it against the emulator, but I'm going to take a slightly simpler tack and just create a blob, to show it being done against the emulator. This doesn't require much code, and hopefully what the code does is clear enough that it doesn't need much explanation.

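```csharp
using Microsoft.WindowsAzure.Storage;
using System;

namespace ConnectoToStorageEmulator
{
    class Program
    {
        static void Main(string[] args)
        {
            // GUIDs give each run a fresh container and blob name, so runs don't collide
            var containerName = Guid.NewGuid().ToString();
            var blobName = Guid.NewGuid().ToString();

            // Point the client library at the local Storage Emulator
            var cloudStorageAccount = CloudStorageAccount.Parse(@"UseDevelopmentStorage=true");
            var blobClient = cloudStorageAccount.CreateCloudBlobClient();

            // Create() throws if a container with this name already exists
            var container = blobClient.GetContainerReference(containerName);
            container.Create();

            // Write the text "1234" into a new block blob in that container
            var blob = container.GetBlockBlobReference(blobName);
            blob.UploadText("1234");
        }
    }
}
```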

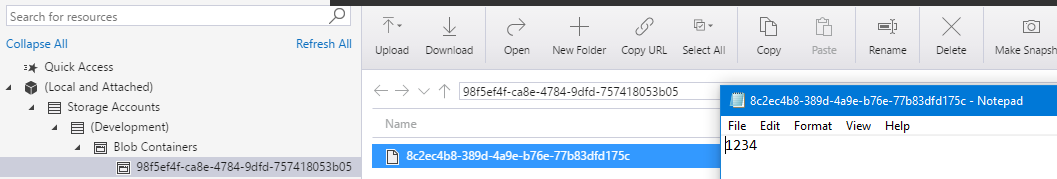

Short version: I'm creating a container, creating a blob inside it and then writing "1234" as text into the blob. Now that's run, let's have a little look in the Azure Storage Explorer and see if the data is in there! Once Storage Explorer has loaded (you may need to close and re-open it; I find it doesn't always pick up new containers consistently unless I do), there should be a blob container with a GUID-ish name, containing a blob that also has a GUID-ish name and contains the text "1234".

(You could even follow the instructions in the aside earlier to drill down into the SQL instance used by the Emulator and find where the Emulator physically stores the blob. Hint: it'll look something like c:\users\robertwray\appdata\local\azurestorageemulator\blockblobroot\1\43312ce8-c824-48ec-8267-1174f5a69cb3.)
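Using Guid.NewGuid().ToString() for the container name works because blob container names must be 3 to 63 characters long, use only lowercase letters, digits and hyphens, start and end with a letter or digit, and never contain two hyphens in a row; a GUID in its default format is 36 lowercase hex characters separated by single hyphens. If you generate names some other way (with a test-name prefix, say), a quick check like this minimal sketch can catch invalid ones before the emulator or Azure rejects them. ContainerNames is a hypothetical helper, and the rules are encoded from Microsoft's container naming documentation.

```csharp
using System;
using System.Text.RegularExpressions;

public static class ContainerNames
{
    // 3-63 characters; lowercase letters, digits and hyphens only; starts with a
    // letter or digit; every hyphen is followed by a letter or digit, which also
    // rules out consecutive hyphens and a trailing hyphen.
    private static readonly Regex Valid =
        new Regex("^[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}$");

    public static bool IsValid(string name) => name != null && Valid.IsMatch(name);

    // A unique, valid name per test run; Guid.ToString() ("D" format) is
    // lowercase hex digits and single hyphens, so it satisfies the rules above.
    public static string NewTestContainerName() => Guid.NewGuid().ToString();
}
```

Because the sample never deletes its container, each run leaves one behind in the emulator; calling container.DeleteIfExists() at the end of a test is one way to keep things tidy.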

So, using the Emulator is very, very easy, but it does come with some limitations and differences, which are called out in the documentation. Perhaps the biggest is that the Emulator doesn't support the File service / SMB protocol, so if you're using that in your application or library, you'll want to put in your own emulation layer.
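One shape such an emulation layer could take is a small interface that your code depends on, with an in-memory implementation for local runs and tests and a real implementation over the Azure File service for production. The names here (IFileShare, InMemoryFileShare) are hypothetical, the interface is deliberately tiny, and the Azure-backed implementation isn't shown. Paths are compared case-insensitively on the assumption that you want to mirror SMB's usual behaviour.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical abstraction: application code talks to IFileShare rather than
// to the Azure File service directly, so tests can swap in the in-memory version.
public interface IFileShare
{
    void WriteText(string path, string contents);
    string ReadText(string path);
    bool Exists(string path);
}

// In-memory stand-in for local development and tests.
public sealed class InMemoryFileShare : IFileShare
{
    private readonly Dictionary<string, string> _files =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

    public void WriteText(string path, string contents) => _files[Normalise(path)] = contents;

    public string ReadText(string path) =>
        _files.TryGetValue(Normalise(path), out var contents)
            ? contents
            : throw new FileNotFoundException("No such file in the in-memory share.", path);

    public bool Exists(string path) => _files.ContainsKey(Normalise(path));

    // Treat "dir\file.txt", "/dir/file.txt" and "dir/file.txt" as the same file.
    private static string Normalise(string path) => path.Replace('\\', '/').TrimStart('/');
}
```

Code under test takes an IFileShare (via its constructor, say), so a unit test can hand it an InMemoryFileShare while production wiring supplies the Azure-backed version.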